In today's data-driven IT landscape, implementing machine learning (ML) solutions at scale is becoming a key factor in the success of many businesses. In this article, we discuss the MLOps approach used in Algolytics Technologies solutions, focusing on cost and computational efficiency, as well as on data and ML model orchestration.

We will also present the results of benchmark and performance tests showing how the right choice of tools can affect the maintenance costs of MLOps environments and their scalability.

At the outset, I want to put forward a controversial thesis: it is possible to implement ML quickly, cheaply and well. How, and with what tools, is what we discuss next.

What is MLOps

MLOps (Machine Learning Operations) is a set of practices that combine machine learning (ML) and operations (Ops) to automate and streamline the processes of deploying, monitoring, and managing ML models in production. With the implementation of MLOps, we want to achieve:

- High business effectiveness: Good-quality decision-making processes that support the business.

- Scalability: The ability to deploy ML models at scale, supporting millions of queries per day.

- Automation and reliability: Reduce manual intervention by automating the processes of training, deploying, and monitoring models, and minimise the risk of errors.

- Consistency: Ensuring consistency and repeatability of ML model results across environments.

- Speed of deployment: Reduce the time it takes to deploy new solutions based on machine learning models and to update existing ones.

- Model lifecycle management: Efficiently manage the entire lifecycle of ML models, from training to retirement.

The term MLOps derives from the older concept of DevOps, which covers standard software development and operations processes. The analogy holds because an ML model and its deployment are themselves a specific kind of IT system.

Key barriers to the uptake of ML-based solutions

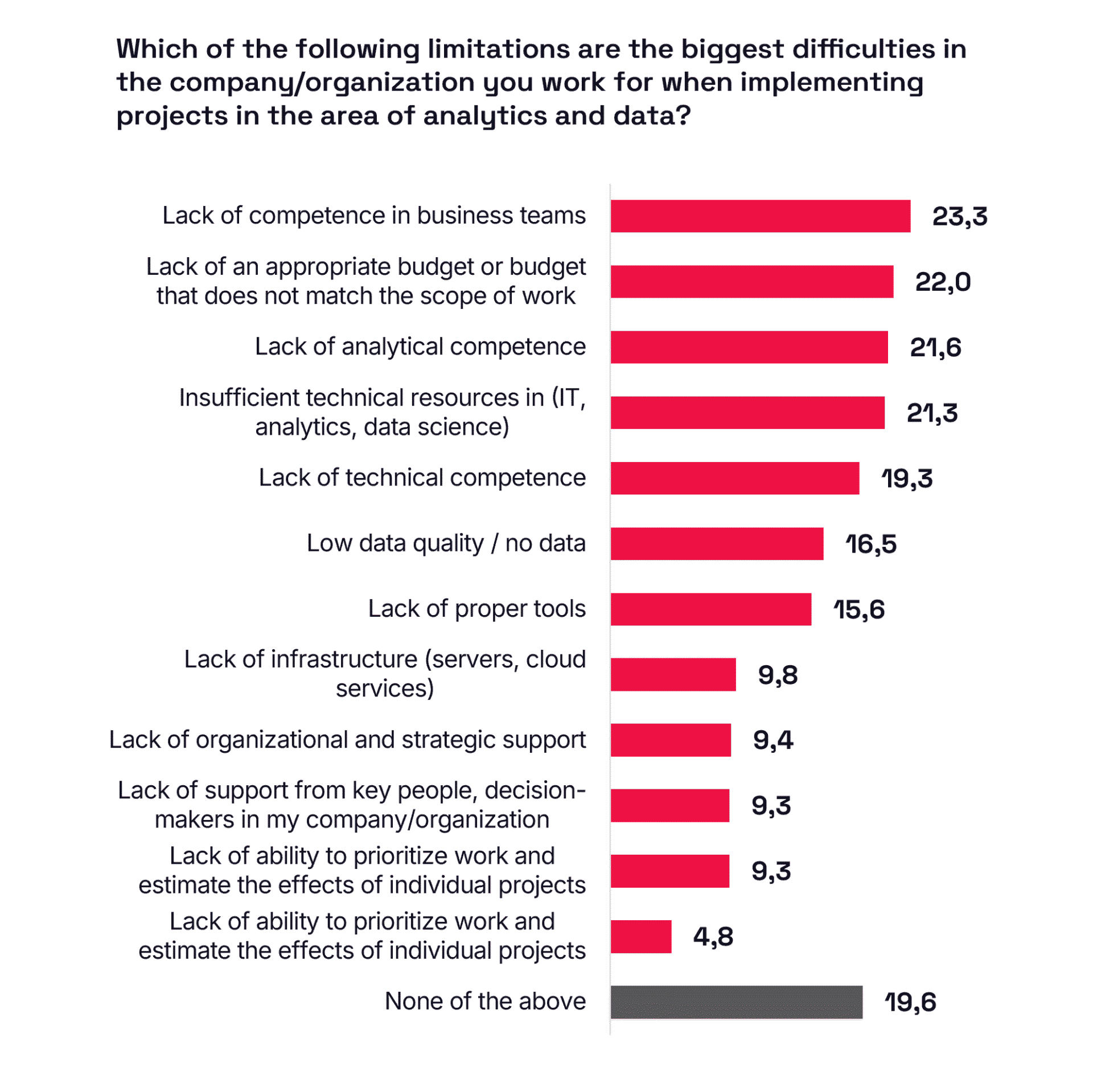

Algolytics Technologies conducted a study on the state of maturity of Polish companies in the area of AI/ML and advanced data analytics. About 700 respondents took part in the survey, including data scientists, ML engineers, and MLOps specialists.

One of the puzzling results was that only 20.5% of respondents declared that their organizations use machine learning technologies for advanced data analysis, even though these technologies have been available for years and offer a wide range of possibilities for automating analyses and processes.

This raises the question: why does this situation persist?

The answer can also be found in the results of the survey. Here are the most important barriers that have been identified:

- Lack of competence in business teams: 23.3% of respondents indicated that the lack of relevant skills in business teams is a significant obstacle.

- Lack of adequate budget: 22.0% of respondents noted that the budget is not aligned with the scope of ML/AI implementation work.

- Lack of analytical competence: 21.6% of the survey participants indicated insufficient analytical skills.

- Insufficient technical resources: 21.3% of respondents pointed to a lack of adequate technical resources, such as IT infrastructure and analytical tools.

- Lack of technical competence: 19.3% of respondents indicated a lack of technical skills in teams.

Source: Algolytics Technologies, "Study: Assessment of the maturity of Polish companies in the area of data analysis and AI", March 2025.

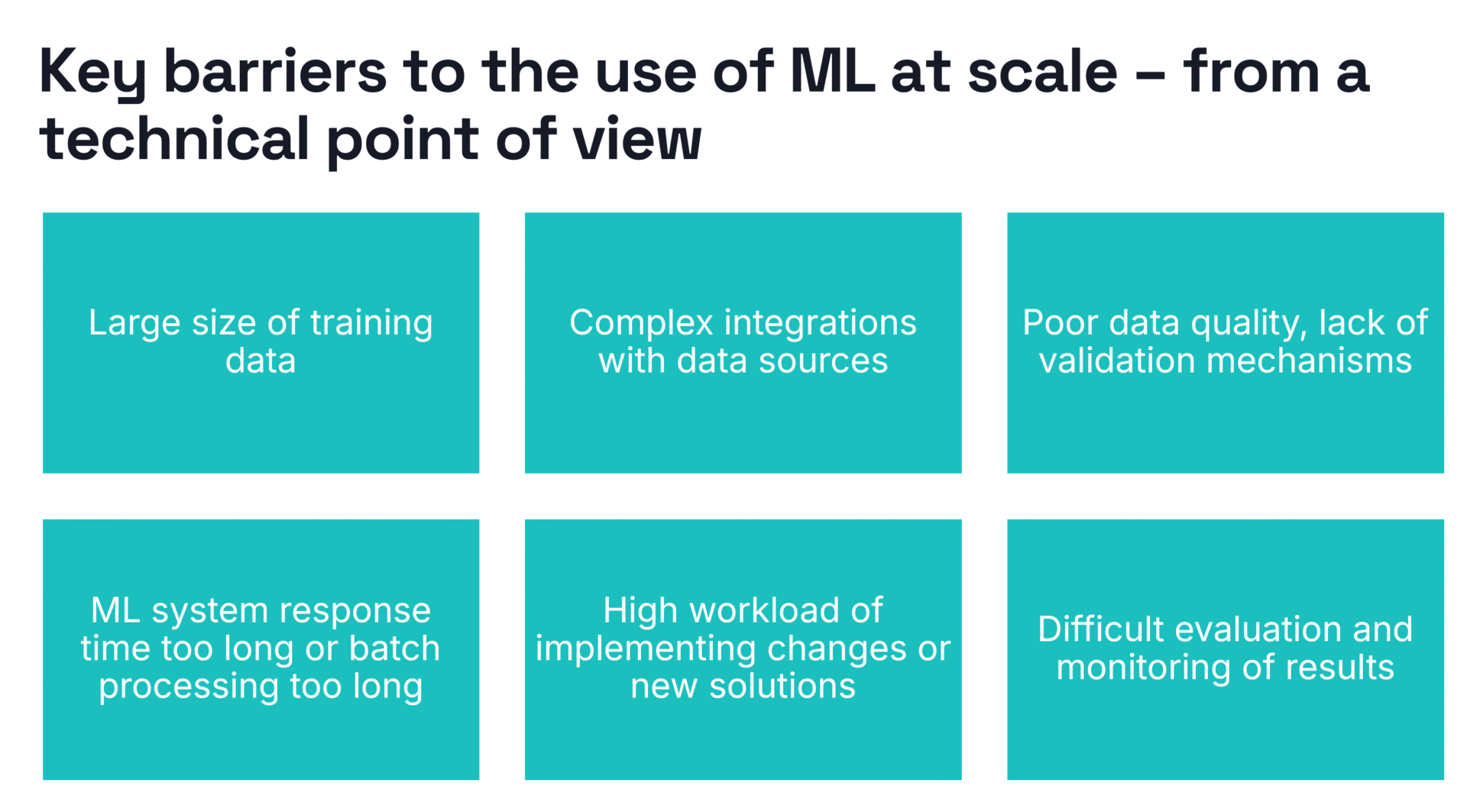

To sum up, the main barriers to the adoption of machine learning are a lack of competence in teams, costs, and technological problems. At Algolytics Technologies we know that the right tools to support the Data Science team and data engineers are important for the smooth operation of the MLOps process.

That is why we are developing a platform that addresses key problems in the MLOps area: automation of ML model construction, data integration, team support (bridging competence gaps), scalability and cost-effectiveness.

Why is deploying ML models challenging?

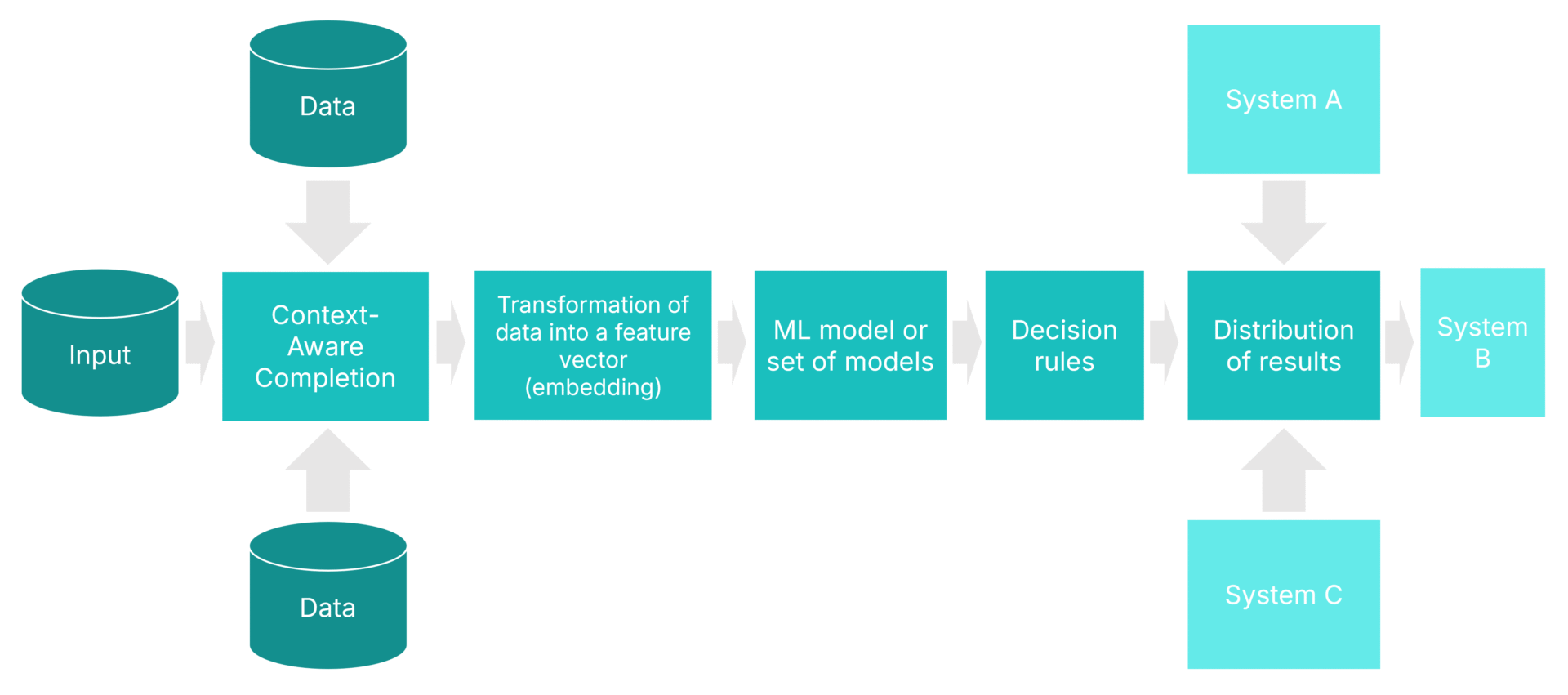

Let's look at a typical pattern for deploying ML models into a business process. Typically, machine learning models are used to automate accurate, fast, and large-scale decision-making. However, the ML model itself, deployed as a service, usually does not handle the entire decision-making process.

The model needs to be fed with data, which can come from multiple sources and may require complex integration. Then, the data must be transformed into the form required by the ML model, most often a feature vector (built, for example, through aggregation or embedding). Only at this stage can we use one or more models.

But that's not all. The prediction/recommendation of the model is most often interpreted and transformed using a set of rules.

Simplified ML model deployment template

In the end, we get a fairly complex application, integrating with many systems, performing complex operations on data. Such an application can be monolithic or consist of many microservices.
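The flow described above (data integration, feature building, model scoring, business rules) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the data sources, feature names, coefficients and rules are all hypothetical and do not come from any Algolytics product.

```python
from math import exp

# Hypothetical data sources; in a real deployment these would be calls
# to a CRM, a billing system, or other integrated services.
def fetch_customer(customer_id: str) -> dict:
    return {"age": 42, "tenure_months": 18}

def fetch_transactions(customer_id: str) -> list[float]:
    return [120.0, 80.0, 45.5]

def build_features(customer: dict, transactions: list[float]) -> list[float]:
    """Transform raw records into the feature vector the model expects."""
    total = sum(transactions)
    average = total / len(transactions) if transactions else 0.0
    return [customer["age"], customer["tenure_months"], total, average]

def score(features: list[float]) -> float:
    """Stand-in model: a logistic function over made-up coefficients."""
    weights = [0.01, 0.02, 0.001, 0.002]
    bias = -1.5
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + exp(-z))

def apply_rules(probability: float, customer: dict) -> str:
    """Interpret the model output with a (hypothetical) set of business rules."""
    if customer["tenure_months"] < 3:
        return "manual_review"
    return "offer" if probability >= 0.5 else "no_offer"

def decide(customer_id: str) -> str:
    """End-to-end decision: integrate data, build features, score, apply rules."""
    customer = fetch_customer(customer_id)
    transactions = fetch_transactions(customer_id)
    features = build_features(customer, transactions)
    probability = score(features)
    return apply_rules(probability, customer)

if __name__ == "__main__":
    print(decide("customer-001"))
```

Even in this toy form, the model call is a single line, while most of the code deals with data access, transformation and rules. That is the part that turns a model deployment into a system implementation project.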

What is the conclusion? Deploying an ML model requires strictly IT resources and competencies. The deployment thus becomes a typical system implementation project and requires the team to have analytical, IT and business competencies. And it costs!

However, let's assume that our implementation was successful. Further problems may still arise at the operation stage, such as difficulties with scaling, slow responses of the decision-making system, or the need to handle data quality issues and validate the quality of the results.

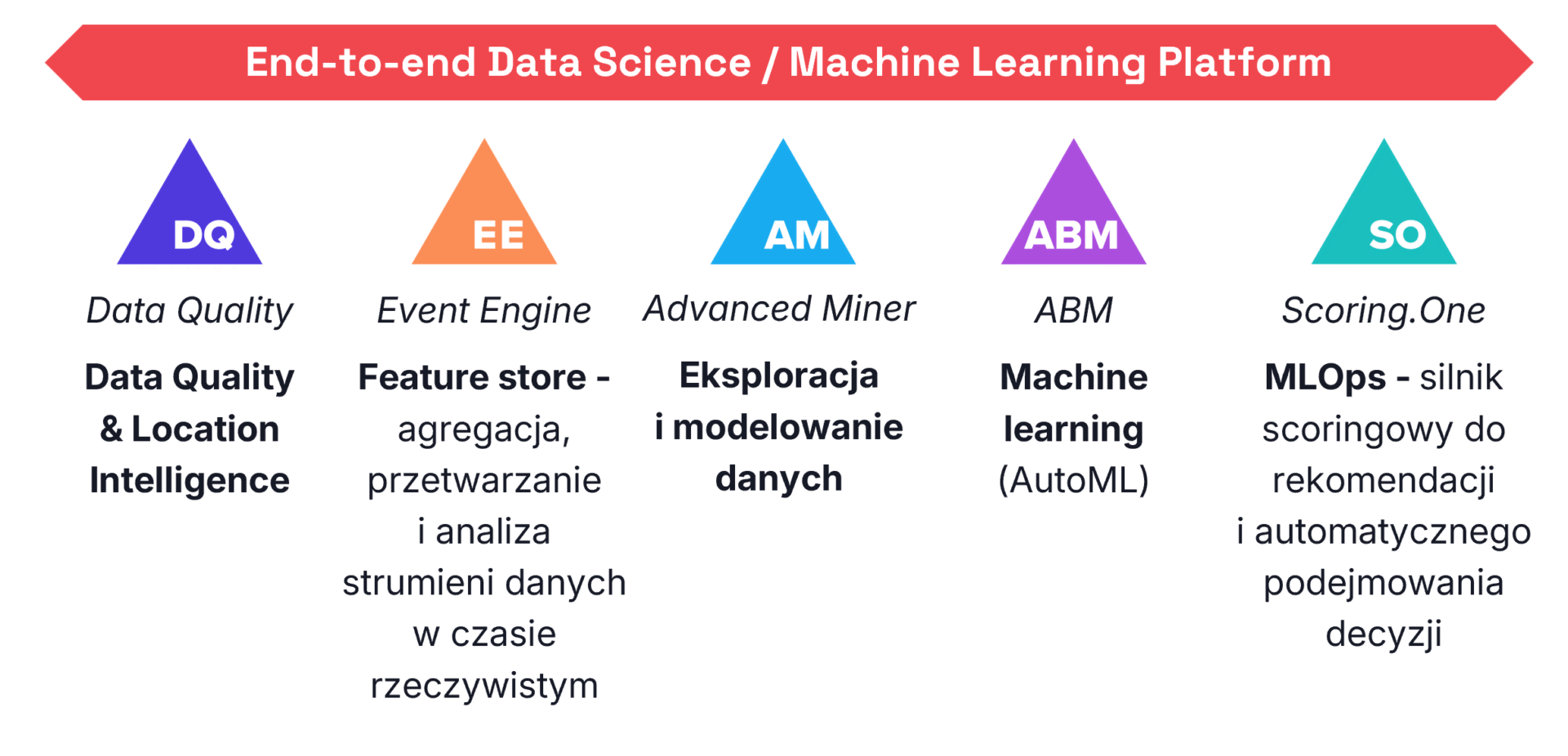

Can we eliminate and solve these problems with an effective MLOps platform? At Algolytics Technologies, we have found a solution: we are creating a platform built on automation, a low-code approach and the integration of all key functions in one tool. The key components of the ML implementation platform are:

- AutoML (ABM) module for fast production of effective ML models.

- MLOps engine (Scoring.One) combining functions such as model repository, decision engine, orchestrator and data integrator.

- In specific cases, where we process high-scale data streams, an online feature store (Event Engine) will also be necessary.

More information about the platform can be found here: https://algolytics.com/#PLATFORM

Comparing the performance/scalability of the Algolytics Platform to cloud and open-source solutions

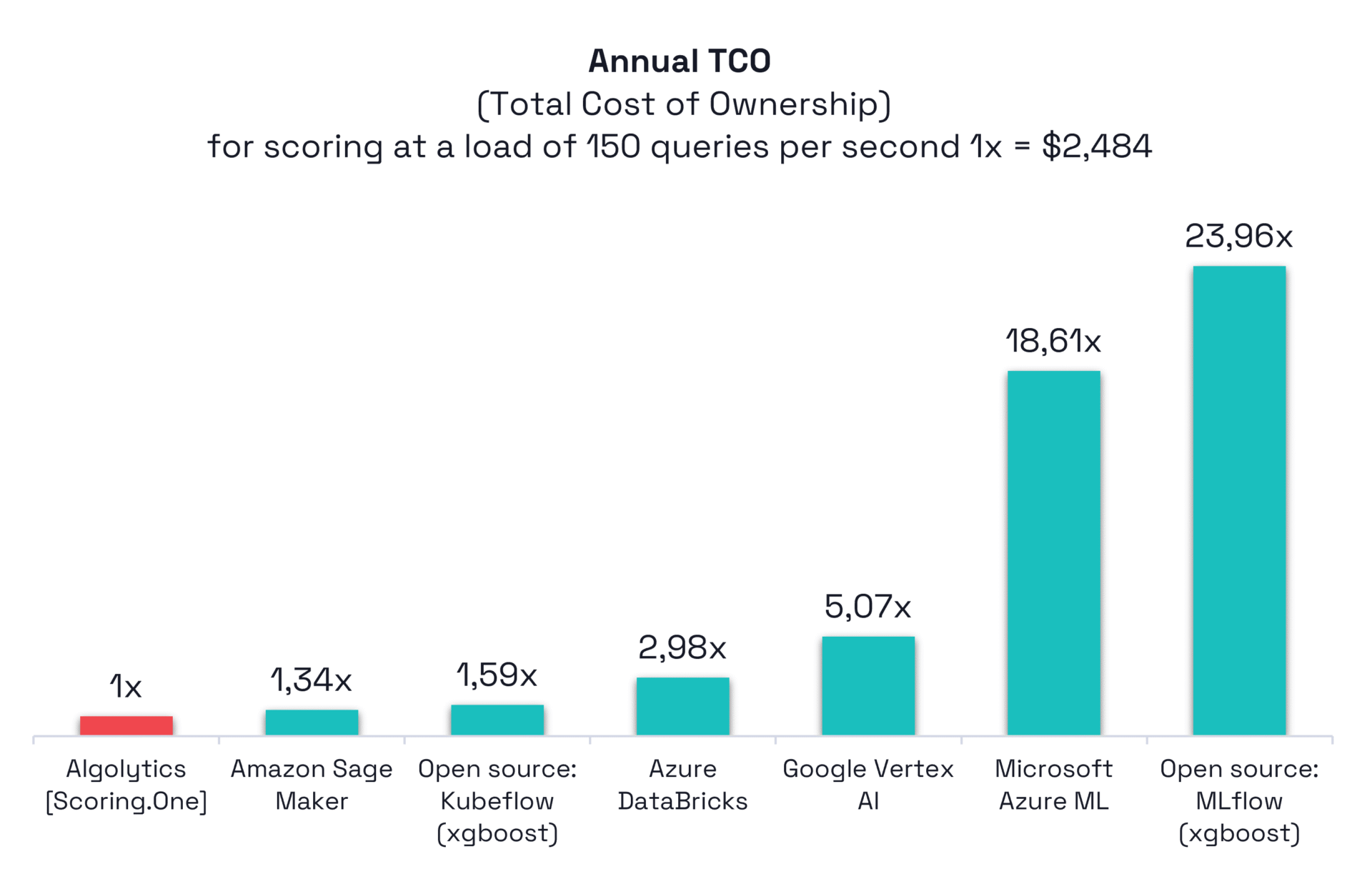

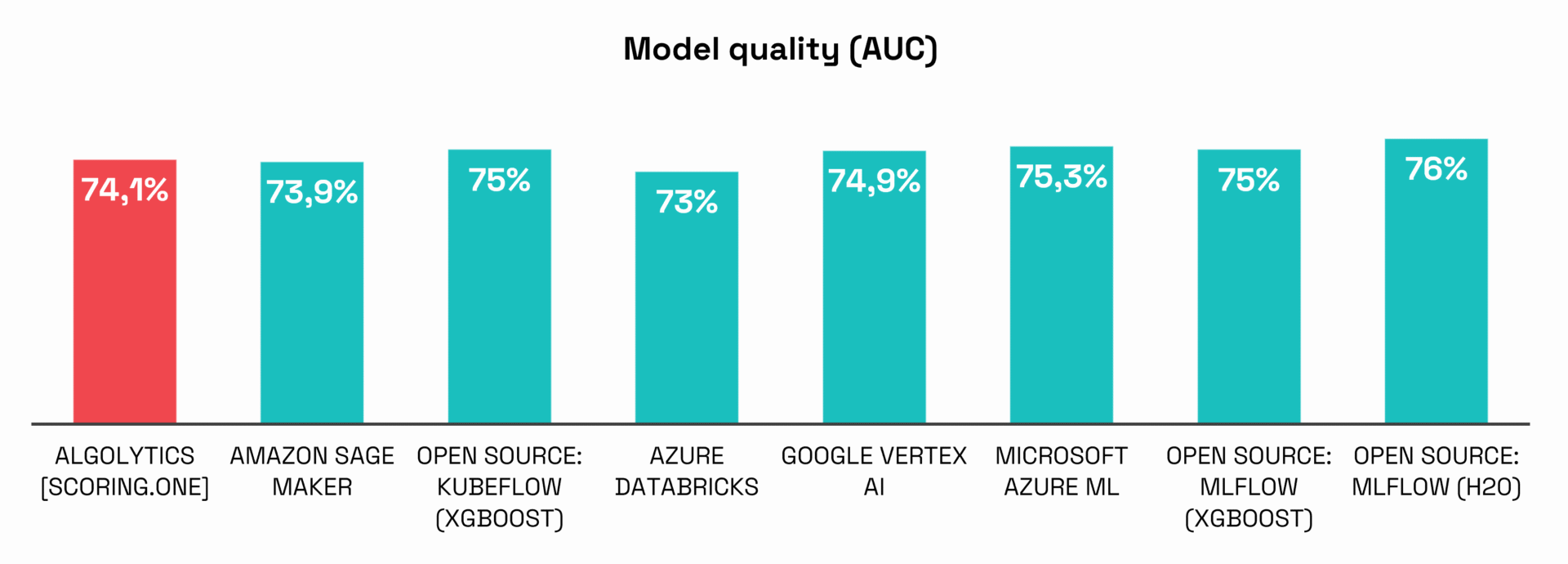

Before we discuss how the Algolytics Platform solves the problem of scalability and supports teams in implementations, we will discuss two tests to show how the choice of technology affects the cost and scalability of the solution.

The first concerned a simple classifier model launched as a web service, and the second a more complex recommendation system based on 100 predictive models. The results show that our solutions are not only more efficient but also much cheaper to maintain.

Example 1: A simple classifier model

The first example is a simple classifier model running as a web service with a workload of 150 queries per second. For the test, we prepared a medium-sized dataset (50 variables, 650,000 observations) that is "malicious" in the sense that it is difficult to model with simple statistical models.

We trained the models using 7 platforms and then implemented the model as a service using the mechanisms available in a given platform:

- Algolytics Platform – model trained in ABM, implementation in Scoring.One

- Amazon SageMaker – autoML module (Canvas) + automatic deployment

- Google Vertex AI – autoML module + automatic deployment

- Microsoft Azure ML – autoML + automatic deployment

- Azure Databricks – autoML + automatic deployment

- Open source – Python XGBoost + MLflow as an MLOps platform

- Open source – Kubeflow – autoML + automatic deployment

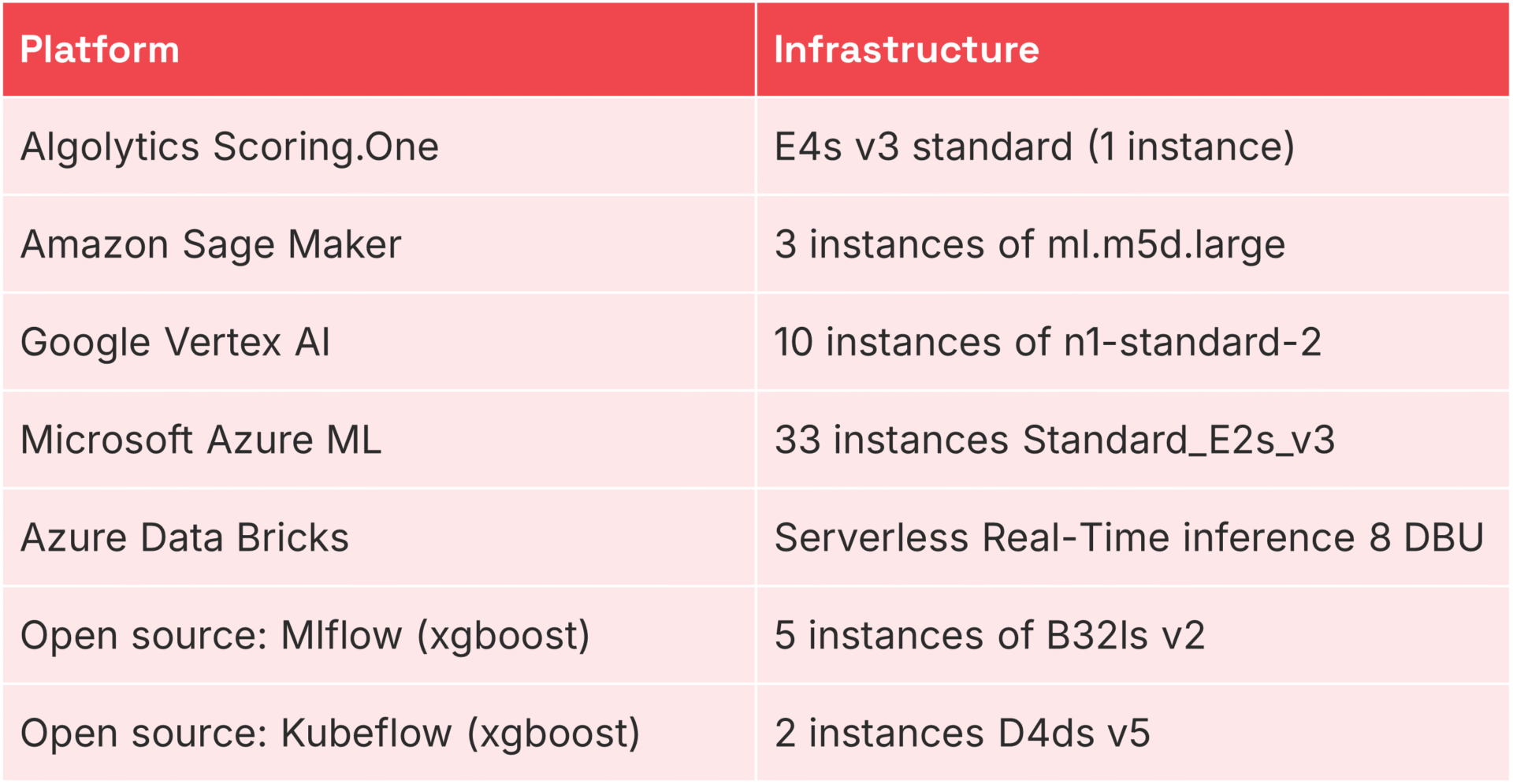

For each environment, we selected the infrastructure (experimentally) in such a way as to obtain the cheapest solution to maintain for a constant load of 150 queries/s.
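The selection logic can be illustrated with simple capacity arithmetic: for each candidate instance type, work out how many instances are needed to sustain the target load, then compare monthly costs. The sketch below is only an illustration. The instance names, throughputs and prices are hypothetical placeholders, not measurements from the benchmark, and only the 150 queries/s target comes from the test description.

```python
from math import ceil

TARGET_RPS = 150          # constant load used in the test
HOURS_PER_MONTH = 730.0   # common approximation for monthly billing

# Hypothetical candidates: (name, sustained queries/s per instance, price per hour).
CANDIDATES = [
    ("small", 40.0, 0.10),
    ("medium", 90.0, 0.18),
    ("large", 200.0, 0.45),
]

def queries_per_day(rps: int) -> int:
    """Convert a constant per-second load into a daily query volume."""
    return rps * 86_400

def instances_needed(target_rps: float, rps_per_instance: float,
                     headroom: float = 0.0) -> int:
    """Smallest instance count that sustains the load plus optional headroom."""
    return ceil(target_rps * (1.0 + headroom) / rps_per_instance)

def monthly_cost(instances: int, hourly_price: float) -> float:
    return instances * hourly_price * HOURS_PER_MONTH

def cheapest(candidates, target_rps: float):
    """Return (monthly cost, instance name, instance count) of the cheapest option."""
    options = []
    for name, rps_per_instance, hourly_price in candidates:
        count = instances_needed(target_rps, rps_per_instance)
        options.append((monthly_cost(count, hourly_price), name, count))
    return min(options)

if __name__ == "__main__":
    cost, name, count = cheapest(CANDIDATES, TARGET_RPS)
    print(f"{queries_per_day(TARGET_RPS):,} queries/day")
    print(f"cheapest: {count} x {name} at about {cost:.2f} per month")
```

In practice the per-instance throughput has to be measured experimentally for each platform, which is why the infrastructure in the test was selected by experiment rather than from published specifications.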

Infrastructure necessary to maintain a load of 150 req/s

Experiments have shown that the cost of maintaining such an environment on the Algolytics Platform is much lower than on popular cloud platforms such as Amazon SageMaker, Google Vertex AI or Microsoft Azure ML. The quality of the models, measured as predictive performance, was very similar, which shows that comparable model quality can be achieved at a lower cost.

Example 2: Complex recommendation system

The second example concerns a more complex recommendation system based on 100 predictive models. This system generates recommendations for any address point, suggesting the type of service with the highest potential out of 100 possible.

The models were trained on a set of 9.3 million observations and 405 variables + 100 target variables.

The implementation scheme combines running ML models with data integration. The process receives an address as input, then uses the Algolytics DQ service to resolve the address and attach a set of 700 spatial features. Next, 100 predictive models are run in parallel, each generating a look-alike score that describes how well a given service matches the location. The last step of the process compares the results and builds a recommendation list.

You can test the endpoint HERE (the address parameter contains the analyzed point; only locations in Poland are supported).
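The structure of this process (resolve the address, attach features, fan out to 100 models, rank the scores) can be sketched as follows. This is a toy illustration only: `resolve_address` is a hypothetical stand-in that fabricates deterministic features rather than calling the Algolytics DQ service, and the "models" are trivial functions, not trained predictors.

```python
from concurrent.futures import ThreadPoolExecutor

N_SERVICES = 100   # number of predictive models, one per service type
N_FEATURES = 700   # number of spatial features attached to an address

def resolve_address(address: str) -> list[float]:
    """Hypothetical stand-in for address resolution and feature enrichment.

    Produces a deterministic pseudo-feature vector from the address text.
    """
    seed = sum(ord(ch) for ch in address)
    return [((seed * (i + 1)) % 97) / 97.0 for i in range(N_FEATURES)]

def make_model(service_id: int):
    """Build a toy scoring function for one service (not a trained model)."""
    def model(features: list[float]) -> float:
        picked = features[service_id::N_SERVICES]
        return sum(picked) / len(picked)
    return model

MODELS = {f"service_{i:03d}": make_model(i) for i in range(N_SERVICES)}

def recommend(address: str, top_n: int = 5) -> list[tuple[str, float]]:
    """Score all services for an address and return the top_n by score."""
    features = resolve_address(address)
    with ThreadPoolExecutor(max_workers=16) as pool:
        futures = {name: pool.submit(model, features)
                   for name, model in MODELS.items()}
        scores = {name: future.result() for name, future in futures.items()}
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top_n]

if __name__ == "__main__":
    for name, value in recommend("Warszawa, Marszalkowska 1"):
        print(name, round(value, 3))
```

Note that a thread pool in CPython does not give true CPU parallelism for pure-Python scoring functions. It is used here only to show the fan-out and ranking structure; a production system would rely on native scoring code or multiple processes.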

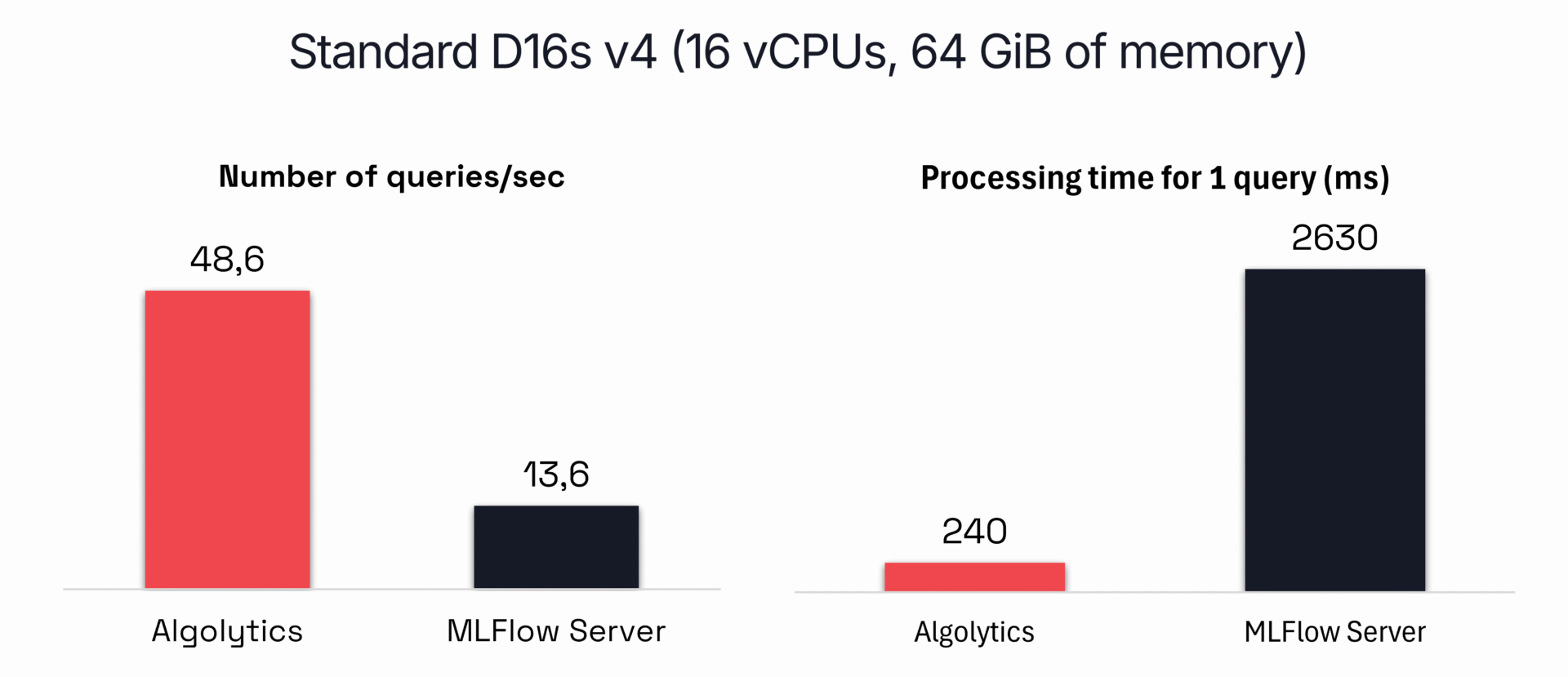

In this case, we only compared the Algolytics Platform and the open-source solution (MLflow + XGBoost), both on the same hardware configuration.

Experiments have shown that our solution can process significantly more queries per second, with shorter response times, than the solution based on MLflow and XGBoost.

Summary

Why is the Algolytics Platform so much more scalable than other solutions in its class?

This is due to several key practices that we follow, including training models in microbatches, using simple algorithms with appropriate data transformations, and implementing scoring code without the use of libraries.
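To give a feel for what "a simple algorithm with appropriate data transformations, scored without external libraries" can look like, here is a minimal sketch of a logistic-regression scorer written with only the Python standard library. It is an assumption-laden illustration, not the code generated by the Algolytics Platform: the variables, bin edges and coefficients are all hypothetical.

```python
from math import exp, log

# Hypothetical model parameters; a real model would export fitted values.
INTERCEPT = -2.0
COEFFICIENTS = {"log_income": 0.3, "age_bin": 0.25, "is_urban": 0.5}
AGE_BIN_EDGES = [25, 40, 60]

def age_bin(age: float) -> int:
    """Map age to an ordinal bin index (0..3) using fixed edges."""
    return sum(1 for edge in AGE_BIN_EDGES if age >= edge)

def transform(record: dict) -> dict:
    """Apply the data transformations the model was (hypothetically) trained with."""
    return {
        "log_income": log(1.0 + max(record["income"], 0.0)),
        "age_bin": float(age_bin(record["age"])),
        "is_urban": 1.0 if record["is_urban"] else 0.0,
    }

def score(record: dict) -> float:
    """Return the predicted probability for one raw input record."""
    x = transform(record)
    z = INTERCEPT + sum(COEFFICIENTS[name] * x[name] for name in COEFFICIENTS)
    return 1.0 / (1.0 + exp(-z))

if __name__ == "__main__":
    print(score({"income": 5000.0, "age": 37, "is_urban": True}))
```

Because scoring reduces to a handful of arithmetic operations with no framework to load, code of this kind is cheap to run per request, which is one plausible reason why such an approach scales well.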

We will discuss these techniques in the upcoming parts of the article, which I will publish soon. Additionally, we will explore how the Algolytics Platform supports analysts and data engineers. Thanks to the low-code approach, we break the competence barrier and accelerate the implementation process, which is also essential in the context of maintenance costs.