Inside the Scoring.One engine

In the previous parts of this series (Part 1, Part 2), we demonstrated that, with the right tools and technological approach, machine learning solutions can be built quickly, effectively and at low cost. We compared popular platforms and explained why Algolytics is the best performer. Now, as announced, we invite you to take a look "under the hood" at the architecture of our MLOps engine, Scoring.One.

From model to scalable application

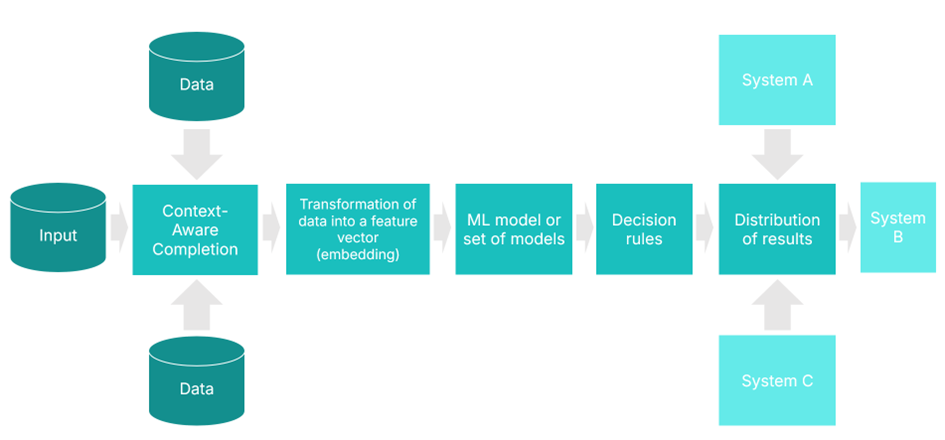

Most MLOps platforms stop at training the model and exposing it via an API or as part of a batch scoring process. At Algolytics, we see the model as just one component of a larger system. Real business value is created only when the entire process—starting with data ingestion, through feature engineering, model execution, and decision logic, all the way to integration with operational systems—is captured within a single, coherent, and optimised scenario.

Components of a machine learning-based application

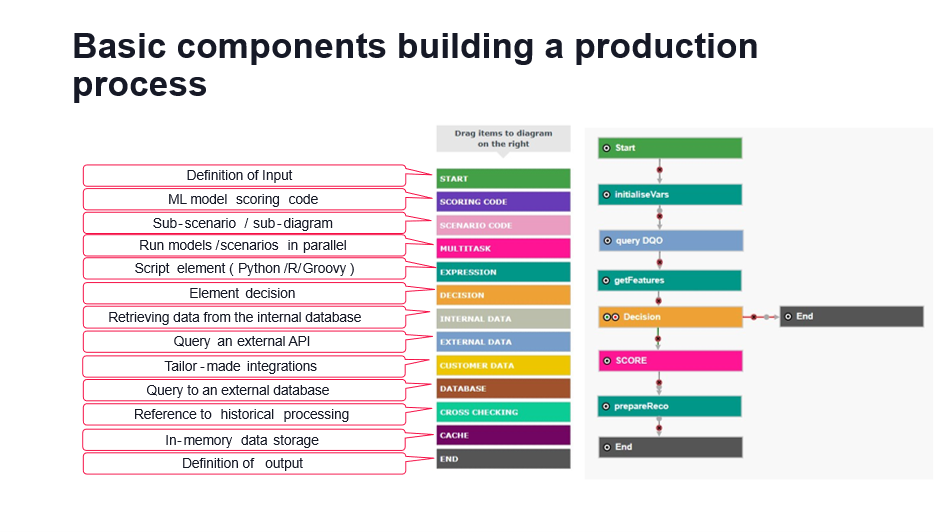

A scenario designed in the platform’s graphical editor becomes a fully functional application ready for production use. It is built from preconfigured components such as data sources, transformations, models, decision rules, sub-scenarios, API calls, and parallel operations. Importantly, it requires no costly or time-consuming implementation. At the same time, it remains fully flexible, enabling the use of custom logic written in Python, R, or Groovy.
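The composition described above can be illustrated with a minimal Java sketch. It assumes, for illustration only, that a component is a function from a record (a `Map` of field names to values) to an enriched copy of that record, and that a scenario is itself a component, which is what allows it to be nested as a sub-scenario. `Component`, `Scenario`, `ScenarioDemo` and the field names are hypothetical and are not the Scoring.One API; the real engine runs steps as asynchronous event processors rather than in a simple loop.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical building block: takes a record and returns an enriched copy.
interface Component {
    Map<String, Object> apply(Map<String, Object> record);
}

// A scenario is an ordered list of components and is itself a Component,
// so one scenario can be embedded in another as a sub-scenario.
final class Scenario implements Component {
    private final List<Component> steps;

    Scenario(List<Component> steps) {
        this.steps = List.copyOf(steps);
    }

    @Override
    public Map<String, Object> apply(Map<String, Object> record) {
        Map<String, Object> current = new HashMap<>(record); // never mutate the caller's input
        for (Component step : steps) {
            current = step.apply(current);
        }
        return current;
    }
}

final class ScenarioDemo {
    // Copy the record and add one field, leaving the input untouched.
    private static Map<String, Object> with(Map<String, Object> r, String key, Object value) {
        Map<String, Object> out = new HashMap<>(r);
        out.put(key, value);
        return out;
    }

    private static double num(Map<String, Object> r, String key) {
        return ((Number) r.get(key)).doubleValue();
    }

    // Feature sub-scenario -> toy model -> decision rule (all placeholders).
    static Scenario build() {
        Component debtRatio = r -> with(r, "debtRatio", num(r, "debt") / num(r, "income"));
        Scenario features = new Scenario(List.of(debtRatio)); // nested sub-scenario
        Component model = r -> with(r, "score", 1.0 - num(r, "debtRatio")); // stands in for an ML model
        Component rule = r -> with(r, "decision", num(r, "score") >= 0.5 ? "ACCEPT" : "REVIEW");
        return new Scenario(List.of(features, model, rule));
    }
}
```

For example, `ScenarioDemo.build().apply(Map.<String, Object>of("debt", 30, "income", 100))` produces a `debtRatio` of 0.3 and the decision `ACCEPT`. Treating a scenario as just another component is what keeps sub-scenarios cheap to reuse.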

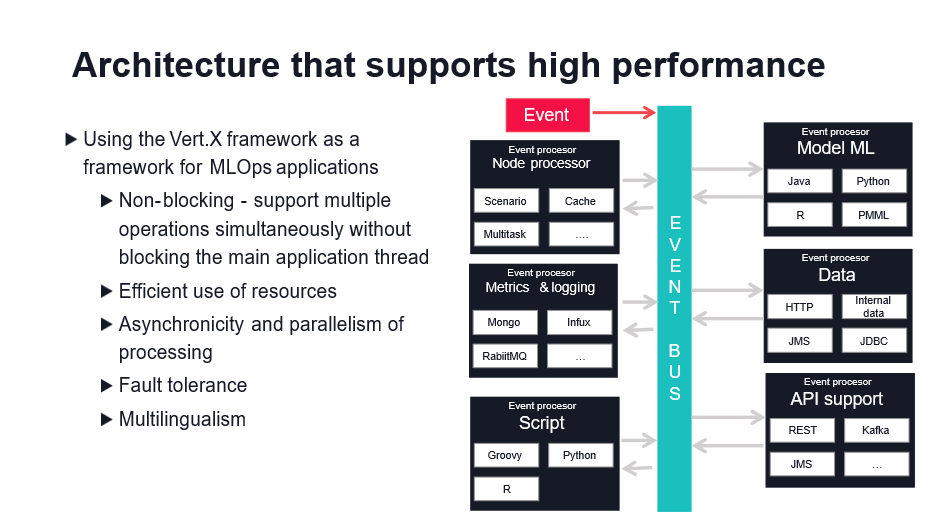

What is behind the performance of Scoring.One (MLOps)? The foundation: Vert.x

The performance and scalability of Scoring.One (MLOps) stem from the platform's technological foundation: the reactive Vert.x toolkit.

Unlike approaches based on Python, Docker containers, and microservices, Scoring.One (MLOps) runs as a reactive, multi-threaded JVM application capable of handling hundreds of parallel queries without duplicating runtime environments. The key lies in asynchronous, non-blocking processing of data and events—precisely what the Vert.x architecture is designed to deliver.

A scenario is not a diagram – it's a production application

The scoring scenario in our editor resembles a graphical workflow, consisting of nodes, connections, models and logical conditions. But it's not just a visual representation – it's a ready-to-run application in which each element of the scenario is implemented as an independent event processor embedded in the Vert.x environment.

Event-driven, non-blocking, massively parallel

A scoring query is treated as an event that moves through successive processing components: data retrieval, feature preparation, ML models, business rules, and output systems. Each processing step is:

- non-blocking – it does not wait for other operations to complete,

- asynchronous – it runs on its own thread pool,

- parallel – many queries can be handled simultaneously.
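A minimal sketch of this non-blocking style, written with plain `java.util.concurrent` rather than Vert.x itself (Vert.x relies on event loops, which this sketch does not reproduce): each step is chained with `CompletableFuture`, so no thread sits idle waiting for the previous step, and many queries are in flight at once on a shared pool. The step functions and the pool size of 8 are illustrative assumptions.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.stream.Collectors;

final class AsyncScoring {
    private final ExecutorService pool;

    AsyncScoring(ExecutorService pool) {
        this.pool = pool;
    }

    // Each stage is scheduled on the pool as soon as its input is ready;
    // the caller only receives a future and returns immediately.
    CompletableFuture<String> score(int customerId) {
        return CompletableFuture
                .supplyAsync(() -> fetchData(customerId), pool) // data retrieval
                .thenApplyAsync(AsyncScoring::features, pool)   // feature preparation
                .thenApplyAsync(AsyncScoring::model, pool)      // ML model
                .thenApplyAsync(AsyncScoring::rule, pool);      // business rule
    }

    // Hypothetical placeholder steps; real ones would call databases, models, etc.
    private static int fetchData(int id) { return id * 10; }
    private static double features(int raw) { return raw / 100.0; }
    private static double model(double x) { return Math.min(1.0, x); }
    private static String rule(double p) { return p >= 0.5 ? "ACCEPT" : "REVIEW"; }

    // Submit all queries first, then wait for the results together.
    static List<String> scoreAll(List<Integer> ids) {
        ExecutorService pool = Executors.newFixedThreadPool(8);
        try {
            AsyncScoring engine = new AsyncScoring(pool);
            List<CompletableFuture<String>> futures = ids.stream()
                    .map(engine::score)
                    .collect(Collectors.toList());
            return futures.stream().map(CompletableFuture::join).collect(Collectors.toList());
        } finally {
            pool.shutdown();
        }
    }
}
```

With these placeholder steps, `AsyncScoring.scoreAll(List.of(3, 7, 20))` returns `["REVIEW", "ACCEPT", "ACCEPT"]`. The design point is that all queries are submitted before any result is awaited, so they overlap in time within one JVM.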

As a result, a single Scoring.One (MLOps) instance can scale vertically, making use of additional CPU cores and memory on the same machine, to process large workloads efficiently without resorting to horizontal scaling by duplicating containers.

At the same time, Scoring.One (MLOps) supports horizontal scaling: additional JVM processes can be deployed across multiple machines. These instances form a distributed cluster connected through a shared event bus and operate as a unified system, with the combined pool of verticles (the units of deployment in Vert.x) dynamically balancing the processing load.
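How a shared pool of verticles can spread work is easiest to see in a simplified model. Vert.x documents that a point-to-point message sent to an address is delivered to one of the handlers registered there, chosen in a non-strict round-robin fashion. The sketch below is a plain-Java, in-process approximation with strict round-robin; `BalancedAddress` is a hypothetical name, and no clustering or networking is modelled.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// One event-bus address served by several verticle instances. In a real
// cluster these could live in different JVMs; here they are local handlers.
final class BalancedAddress<T, R> {
    private final List<Function<T, R>> handlers = new CopyOnWriteArrayList<>();
    private final AtomicInteger next = new AtomicInteger();

    // Registering another instance (e.g. from a newly started JVM)
    // immediately adds capacity to the shared pool.
    void register(Function<T, R> handler) {
        handlers.add(handler);
    }

    // Point-to-point send: exactly one handler processes each message,
    // chosen in round-robin order.
    R send(T message) {
        if (handlers.isEmpty()) {
            throw new IllegalStateException("no handlers registered");
        }
        int index = Math.floorMod(next.getAndIncrement(), handlers.size());
        return handlers.get(index).apply(message);
    }
}
```

With two registered handlers, successive messages alternate between them, so adding a handler is all it takes to widen the pool.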

Elimination of classic bottlenecks

Traditional MLOps platforms often face several limitations:

- each model runs in a separate container, which multiplies memory consumption and duplicates runtime environments,

- components communicate over the network, which adds significant latency,

- Python's Global Interpreter Lock (GIL) prevents threads from executing Python code in parallel within a single process.

Scoring.One overcomes these limitations by running the entire process within a single, optimised JVM instance. The result is a significantly simpler and more efficient environment than the traditional Docker + Python + REST + MLflow stack.

Resilience, monitoring and transparency

Each component works independently. In the event of an error (e.g. missing data or incorrect address), the problem is handled locally, without interrupting the entire scenario. Moreover:

- all events are logged and archived,

- scenarios keep a full execution history,

- each step can be run and debugged separately.
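Local error handling combined with an execution history can be sketched as a step wrapper. This is a hypothetical illustration, not Scoring.One code: `IsolatedRun`, its `step` method and the fallback-value policy are assumptions. A failing step is caught, recorded in the history and replaced by a fallback, so the following steps still run.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical wrapper that isolates each step of a scenario run.
final class IsolatedRun {
    private final List<String> history = Collections.synchronizedList(new ArrayList<>());

    // Runs one step; on failure the error is handled locally: it is logged
    // in the history and a fallback value is returned instead of aborting.
    <T> T step(String name, T input, UnaryOperator<T> logic, T fallback) {
        try {
            T result = logic.apply(input);
            history.add(name + ": OK");
            return result;
        } catch (RuntimeException e) {
            history.add(name + ": FAILED (" + e.getMessage() + "), fallback used");
            return fallback;
        }
    }

    // Snapshot of the execution history, in the order the steps ran.
    List<String> history() {
        return List.copyOf(history);
    }
}
```

In use, a step that throws on an incorrect address yields its fallback and a `FAILED` entry in the history, while the next step runs normally and is logged as `OK`.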

Multilingual and flexible integration

You can combine models and components written in different languages in a single scenario:

- Python, R, Java, Groovy,

- as well as PMML and code generated by the AutoML Algolytics system.

There is no need to build microservices, separate containers or complex CI/CD pipelines. Most integrations are completed using ready-made components, eliminating the need to write code.

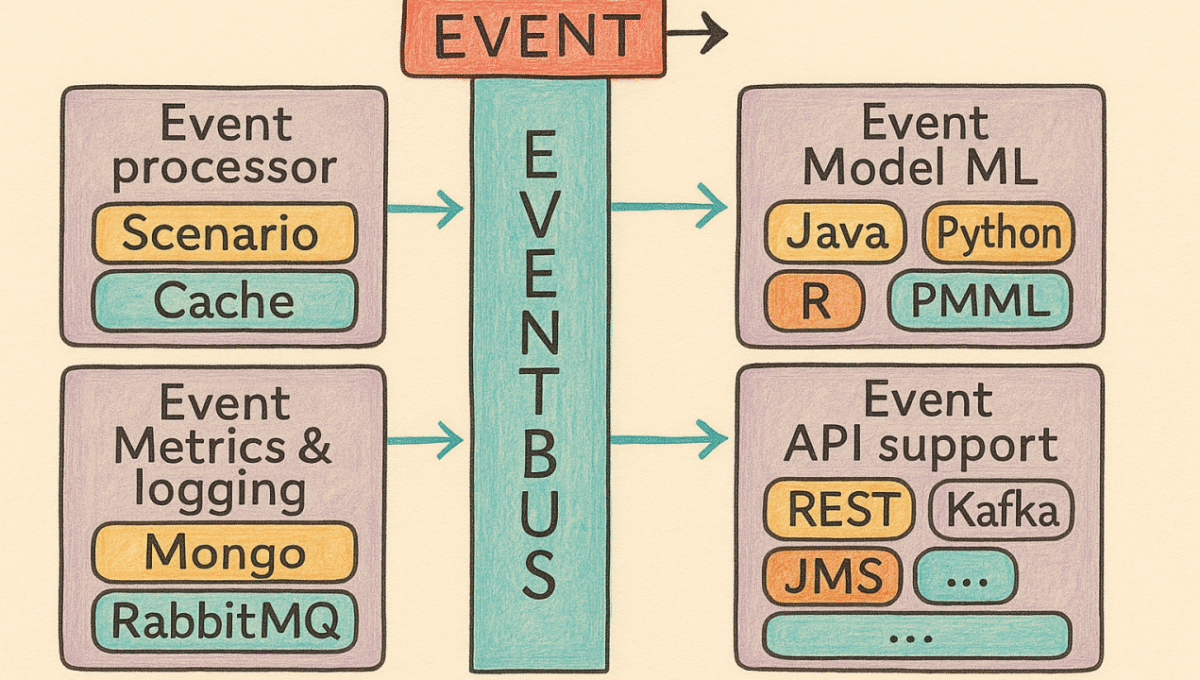

Event Bus – the communication framework of the application

Communication between Scoring.One (MLOps) components is carried out through an internal event bus, a reactive data bus. Each element of the scenario (e.g. a transformation, model or API call) is a separate verticle that responds to a specific type of event and passes its result to the next component.

This architecture ensures that:

- the entire flow is asynchronous and non-blocking,

- the application can handle multiple requests simultaneously without compromising responsiveness,

- the system copes with overload automatically through back-pressure mechanisms,

- each component can be written in a different programming language (e.g. Java, Python, R), giving full technological flexibility.
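The address-based messaging and back-pressure described above can be illustrated with a toy in-process event bus. This is not the Vert.x API and not how Scoring.One implements back-pressure; `MiniEventBus`, `consumer` and `trySend` are hypothetical names, and the overload limit is a simple `Semaphore` on messages in flight. When the limit is reached, `trySend` returns `false` instead of queueing without bound, which is the signal a sender would use to slow down or retry.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.function.Consumer;

// Toy event bus: components register a handler at a string address and
// other components send messages to that address asynchronously.
final class MiniEventBus implements AutoCloseable {
    private final Map<String, Consumer<Object>> handlers = new ConcurrentHashMap<>();
    private final ExecutorService workers = Executors.newCachedThreadPool();
    private final Semaphore inFlight; // caps the number of messages being processed at once

    MiniEventBus(int maxInFlight) {
        this.inFlight = new Semaphore(maxInFlight);
    }

    // Register the component (e.g. a model step) listening at an address.
    void consumer(String address, Consumer<Object> handler) {
        handlers.put(address, handler);
    }

    // Non-blocking send: returns immediately. Returns false when nobody listens
    // at the address or when the bus is saturated (the back-pressure signal).
    boolean trySend(String address, Object message) {
        Consumer<Object> handler = handlers.get(address);
        if (handler == null || !inFlight.tryAcquire()) {
            return false;
        }
        workers.execute(() -> {
            try {
                handler.accept(message); // a handler may itself trySend to the next address
            } finally {
                inFlight.release();
            }
        });
        return true;
    }

    @Override
    public void close() {
        workers.shutdown();
    }
}
```

A pipeline is formed by having, say, the `"features"` handler call `trySend("model", result)` when it finishes, so components never call each other directly and only share addresses.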

Verticle groups and their functions in Scoring.One (MLOps)

The Scoring.One architecture consists of specialised verticles, organised into functional groups:

- Model processors – run ML models written as Java classes, Python scripts, or PMML/ONNX objects.

- Script processors – allow you to execute custom logic in scripting languages (Python, Groovy, R).

- API processors – integrate with external systems (REST, Kafka, SOAP) using asynchronous I/O.

- Data processors – read data from and write data to databases, queues and caches, using JDBC, HTTP and other protocols.

- Control processors – handle flow control: logical conditions, branching, parallel execution and sub-scenario calls.

- Monitoring & logging processors – provide logging and real-time performance metrics (MongoDB, InfluxDB, RabbitMQ).

Each verticle works independently and is fault-tolerant, following the principle of resilience through isolation.
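Two of the control-processor behaviours, conditional branching and parallel execution of independent branches, can be sketched in plain Java. `Control`, `branch` and `parallel` are hypothetical helpers written for this illustration; they are not part of Scoring.One or Vert.x.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.function.Function;
import java.util.function.Predicate;
import java.util.stream.Collectors;

// Hypothetical control-flow helpers in the spirit of "control processors".
final class Control {
    private Control() {}

    // Branching: route the input to one of two sub-scenarios based on a condition.
    static <T, R> Function<T, R> branch(Predicate<T> condition,
                                        Function<T, R> ifTrue,
                                        Function<T, R> ifFalse) {
        return input -> condition.test(input) ? ifTrue.apply(input) : ifFalse.apply(input);
    }

    // Parallel execution: run independent sub-scenarios on the same input
    // concurrently and collect their results in declaration order.
    static <T, R> CompletableFuture<List<R>> parallel(T input,
                                                      List<Function<T, R>> branches,
                                                      Executor executor) {
        List<CompletableFuture<R>> futures = branches.stream()
                .map(b -> CompletableFuture.supplyAsync(() -> b.apply(input), executor))
                .collect(Collectors.toList());
        return CompletableFuture.allOf(futures.toArray(new CompletableFuture[0]))
                .thenApply(ignored -> futures.stream()
                        .map(CompletableFuture::join)
                        .collect(Collectors.toList()));
    }
}
```

Because `parallel` returns a future rather than a list, the calling step stays non-blocking; results keep the declaration order even though the branches may finish in any order.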

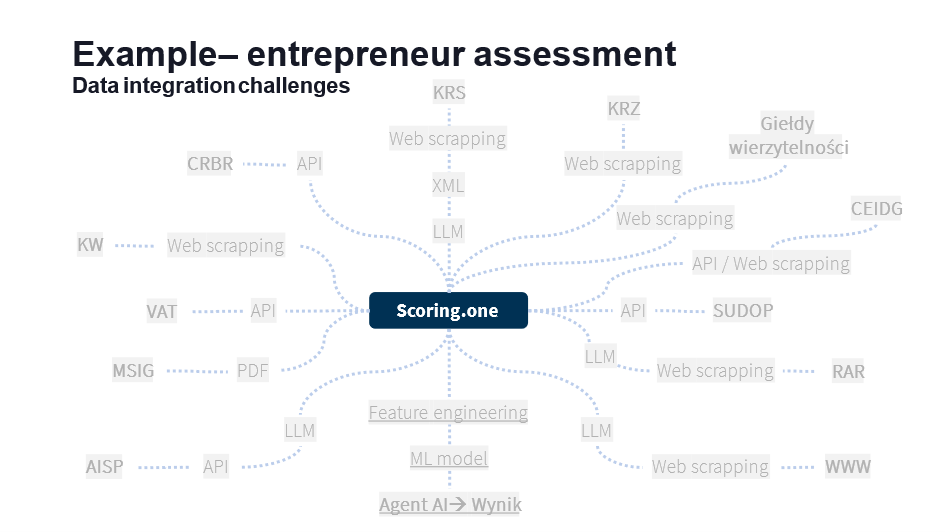

Case study: Entrepreneur assessment

The effectiveness of this architecture is best demonstrated by its production use cases, such as a complex entrepreneur assessment process implemented by leading financial and insurance organisations.

Data and processing complexity

Although at first glance entrepreneur scoring seems simple ("Is the customer reliable?"), in reality it requires the platform to:

- obtain data from many public sources (e.g. KRS, CEIDG, GUS, CRBR, SUDOP, MSIG), often using web crawlers,

- process unstructured documents (e.g. registration files, PDF and XML documents),

- use integrated LLMs to extract data from text (e.g. when analysing register files),

- retrieve data from internal databases and feature stores (e.g. payment history, signals from the transaction system),

- run several ML models and a set of expert rules,

- deliver the scoring result to the decision-making system in real time.

In total, this involves hundreds of system interactions, conditional dependencies, asynchronous queries, data transformations and logical decisions. Implementing such a solution with a classic microservice approach means weeks of work and many potential points of failure.

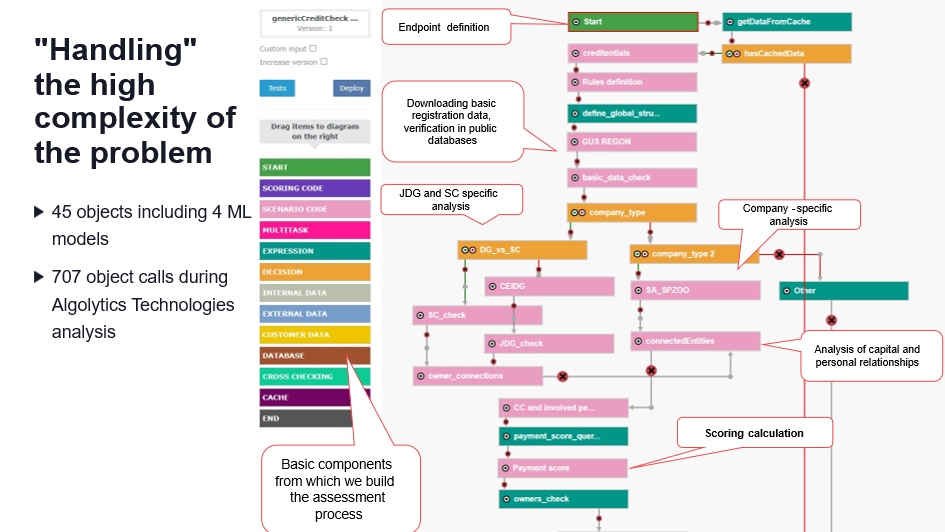

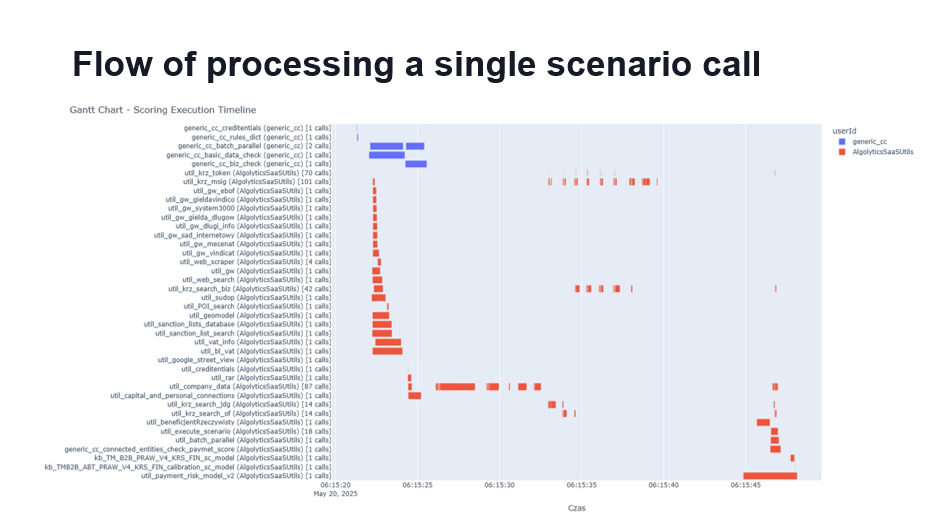

One scenario – a complex process

In Scoring.One (MLOps), the entire process is encapsulated within a single low-code scenario, leveraging dozens of sub-scenarios and hundreds of components. During an analysis conducted by Algolytics Technologies, the system executed 707 calls to various components and models, covering not only the assessed entrepreneur but also related entities and individuals whose data was used to enrich the input features.

With asynchronous and parallel processing, the entire process takes less than one minute. Moreover, the platform allows you to perform multiple such assessments simultaneously.
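Why parallel processing keeps such a process short can be shown with a small fan-out sketch: when independent sources are queried concurrently, total latency is roughly that of the slowest source rather than the sum of all of them, and a per-source timeout caps even that. The sketch assumes each source is a simple lookup function; `Enrichment`, the `MISSING` sentinel and the timeout policy are hypothetical choices for illustration, not the behaviour of the production scenario.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

final class Enrichment {
    static final String MISSING = "MISSING"; // hypothetical sentinel for unavailable data

    // Queries all sources concurrently; a failed or slow source yields MISSING
    // instead of blocking or aborting the whole assessment.
    static Map<String, String> enrich(String companyId,
                                      Map<String, Function<String, String>> sources,
                                      long timeoutMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, sources.size()));
        try {
            Map<String, CompletableFuture<String>> pending = new LinkedHashMap<>();
            sources.forEach((name, lookup) -> pending.put(name,
                    CompletableFuture.supplyAsync(() -> lookup.apply(companyId), pool)
                            .exceptionally(error -> MISSING) // source failed
                            .completeOnTimeout(MISSING, timeoutMillis, TimeUnit.MILLISECONDS))); // source too slow
            Map<String, String> features = new LinkedHashMap<>();
            pending.forEach((name, future) -> features.put(name, future.join()));
            return features;
        } finally {
            pool.shutdownNow(); // interrupt lookups still running after a timeout
        }
    }
}
```

With one healthy, one failing and one very slow source and a 200 ms timeout, the call returns after roughly 200 ms with the healthy result plus two `MISSING` entries, instead of waiting for the slow source.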

Summary

In this part of the series, we have shown that the outstanding performance and cost-efficiency of Scoring.One (MLOps) stem from a deliberately designed, reactive architecture built on Vert.x. This foundation makes our scoring scenarios not only highly performant but also flexible, resilient and production-ready. It is a solution that truly empowers data science and IT teams: it accelerates implementation, streamlines integration and reduces infrastructure costs without compromise.