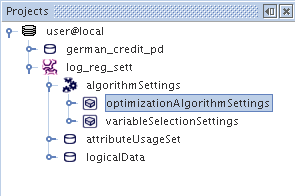

Optimization methods are used in other Data Mining algorithms. Currently they are used in IRLS Regression, LogisticRegression, TimeSeries, SurvivalAnalysis and Bivariate.

The optimization criteria should be set at the model construction stage. They are available through algorithm settings (AlgorithmSettings).

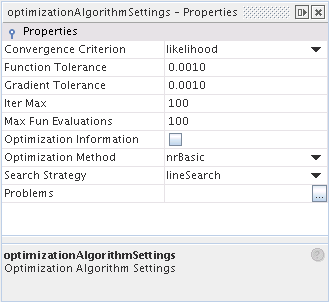

Table 6.2. Optimization Algorithm Settings

| Name | Description | Possible values | Default value |
|---|---|---|---|
| Convergence Criterion | the stop criterion for the likelihood optimization algorithm | gradient / likelihood / likelihoodRelative | likelihood |
| Function Tolerance | the stop threshold for the likelihood optimization algorithm | positive real numbers | 0.001 |
| Gradient Tolerance | the stop threshold for the gradient optimization algorithm | positive real numbers | 0.001 |
| Iter Max | the maximum number of iterations performed by the optimization algorithm | positive integer numbers | 100 |
| Max Fun Evaluations | the maximum number of function evaluations per iteration performed by the optimization algorithm | positive integer numbers | 100 |
| Optimization Information | if TRUE, various optimization statistics are saved; this option is only available at the script level; it does not apply to the nrBasic optimization algorithm | TRUE / FALSE | FALSE |
| Optimization Methods | the selection of the optimization algorithm | nrBasic / optConjugateGradient / optFDNewton / optQuasiNewton / optNewton | nrBasic |
| Search Strategy | the algorithm for finding the extreme value of the function; the available algorithms come from the Opt++ library; this option does not apply to the nrBasic optimization algorithm | lineSearch / trustRegion / trustPDS | lineSearch |

- Convergence Criterion, Function / Gradient Tolerance

The effect of these settings depends on the selected estimation method.

The Newton-Raphson algorithm with Convergence Criterion set to gradient converges when the Euclidean norm of the gradient of the likelihood function is less than the value of Gradient Tolerance. If Convergence Criterion is set to likelihood or likelihoodRelative, the Newton-Raphson algorithm converges when the value of the likelihood function has changed in two consecutive steps by less than the value of Function Tolerance.
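The Newton-Raphson stopping rules above can be sketched as a single check. This is a minimal illustration, not the product's implementation: the function name `has_converged` and its parameters are hypothetical, and the scaling used for likelihoodRelative (dividing the change by the magnitude of the previous value) is an assumption, since the text does not define how the relative variant differs from likelihood.

```python
import math


def has_converged(criterion, gradient, f_prev, f_curr,
                  function_tolerance=0.001, gradient_tolerance=0.001):
    """Return True if the chosen stop criterion is met (hypothetical helper).

    criterion -- "gradient", "likelihood" or "likelihoodRelative"
    gradient  -- gradient of the likelihood function at the current step
    f_prev    -- likelihood value at the previous step
    f_curr    -- likelihood value at the current step
    """
    if criterion == "gradient":
        # Euclidean norm of the gradient compared with Gradient Tolerance.
        norm = math.sqrt(sum(g * g for g in gradient))
        return norm < gradient_tolerance

    change = abs(f_curr - f_prev)
    if criterion == "likelihood":
        # Change between two consecutive steps compared with Function Tolerance.
        return change < function_tolerance
    if criterion == "likelihoodRelative":
        # ASSUMPTION: the change is scaled by the previous value's magnitude.
        scale = max(abs(f_prev), 1e-12)
        return change / scale < function_tolerance

    raise ValueError("unknown convergence criterion: " + repr(criterion))
```

With the default tolerances, a change in the likelihood from -100.0 to -99.95 does not satisfy the likelihood criterion (the change is 0.05), but it does satisfy the relative variant under the scaling assumed here (0.05 / 100 = 0.0005).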

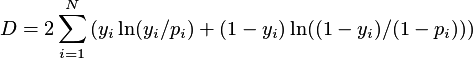

The Fisher algorithm (available only for Logistic Regression) with Convergence Criterion set to gradient stops when the value of deviance

defined by the formula:

has changed in two consecutive steps by less than the value of Gradient Tolerance.

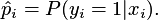

The Fisher algorithm with Convergence Criterion set to likelihood or likelihoodRelative stops when the absolute difference between the SSE values of the models from two consecutive steps is less than the value of Function Tolerance. The SSE is defined by the formula:

where

In the case of IRLS Regression, the Convergence Criterion is ignored and the algorithm stops when the value of variance has changed in two consecutive steps by less than the value of Function Tolerance.

- Optimization Information

The following statistics are returned by the optimization algorithm (with the exception of nrBasic): iteration number, function value, absolute value of the function gradient, step value, number of function value evaluations. The statistics are available only at the script level.

- Optimization Methods

The algorithms optConjugateGradient, optFDNewton, optQuasiNewton and optNewton are described in the Optimization Methods section.

The nrBasic algorithm is a modification of the Newton-Raphson method with step halving.
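To illustrate the general idea of Newton-Raphson with step halving, the sketch below maximizes a one-parameter concave log-likelihood. It is not the nrBasic implementation: the function `newton_step_halving`, its parameters, and the acceptance rule (halve the step until the function value improves) are assumptions chosen for illustration, and the example log-likelihood is invented for the demonstration.

```python
import math


def newton_step_halving(f, grad, hess, x0, iter_max=100,
                        max_halvings=50, gradient_tolerance=0.001):
    """Maximize a concave scalar function f (illustrative sketch only).

    Returns the final point and the number of Newton iterations performed.
    """
    x = x0
    for iteration in range(iter_max):
        g = grad(x)
        if abs(g) < gradient_tolerance:
            return x, iteration
        step = -g / hess(x)          # full Newton-Raphson step
        t = 1.0
        for _ in range(max_halvings):
            if f(x + t * step) > f(x):
                break                # the (possibly shortened) step improves f
            t /= 2.0                 # otherwise halve the step and retry
        else:
            return x, iteration      # no improving step was found
        x += t * step
    return x, iter_max


# Hypothetical example: l(b) = 30*b - 10*exp(b), maximized at b = log(3).
def loglik(b):
    return 30.0 * b - 10.0 * math.exp(b)


def loglik_grad(b):
    return 30.0 - 10.0 * math.exp(b)


def loglik_hess(b):
    return -10.0 * math.exp(b)


b_hat, n_iter = newton_step_halving(loglik, loglik_grad, loglik_hess, x0=-3.0)
```

Starting from -3.0, the full Newton step overshoots far past the maximum and lowers the function value, so the first iteration accepts only a shortened step. Later iterations take the full step.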